Introducing Testinium SUITE

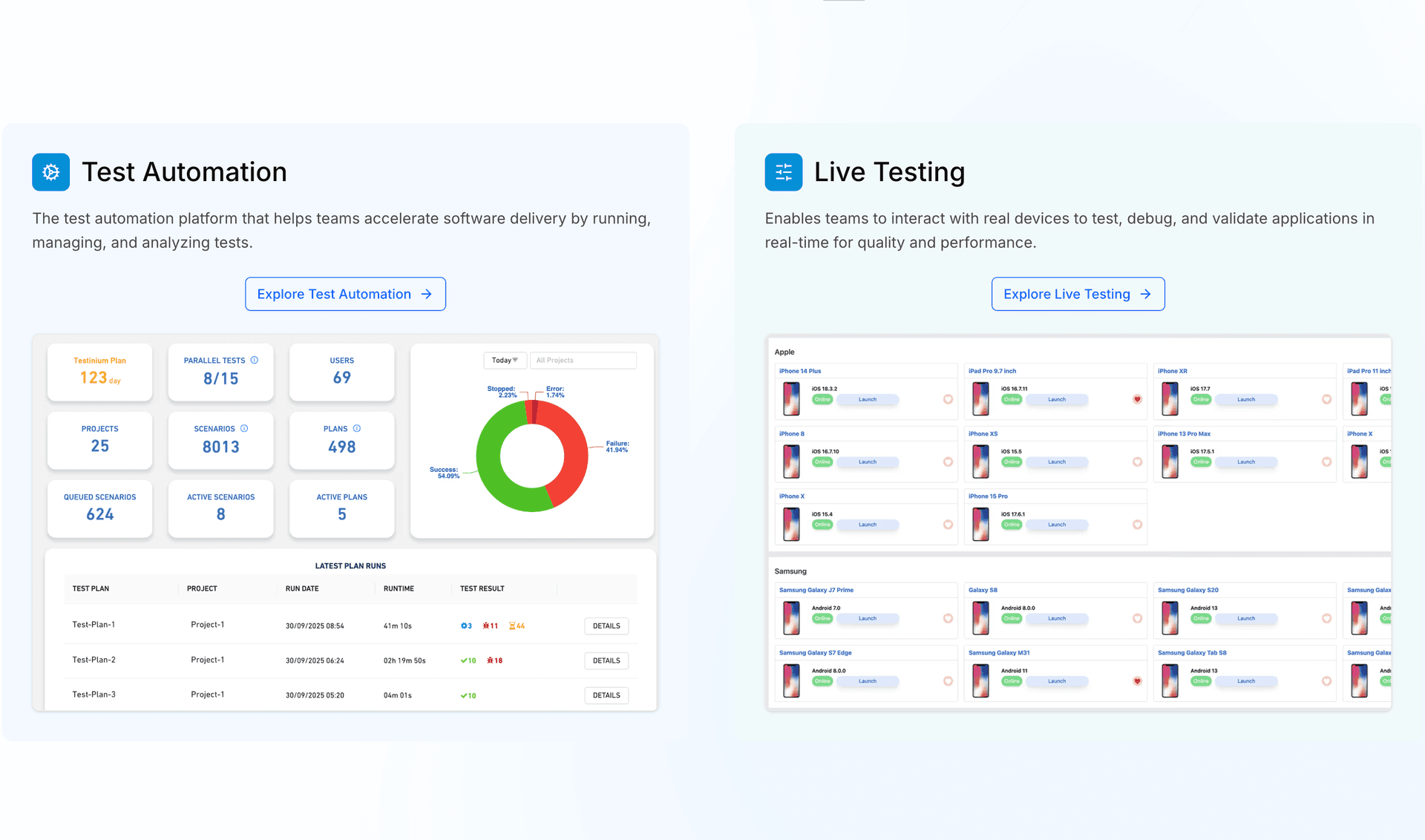

A unified platform for modern software testing

We are excited to announce that our core product, previously known simply as Testinium, has evolved int

We're proud to announce that Testinium has been named a Representative Vendor in the 2025 Gartner Market Guide for Quality Engineering Services. As

Testinium has proudly secured its position among Turkey’s 50 fastest-growing technology companies, as recognized by Deloitte! This remarkable achievem
