Flaky Tests and How to Reduce Them

In the last few decades, the tech world has been evolving at an ever-increasing rate, and simply keeping up with the changes is a challenge. For software development and testing in particular, the changes are too risky to ignore. The booming mobile industry brought the challenge of understanding and handling different mobile operating systems. With the growing variety of browsers, on both mobile devices and computers, came the issue of mechanisms that keep changing. Building scalable and configurable automated tests is crucial and requires much more tedious work. Tests that are not always reliable, namely flaky tests, cause testing processes to fail, and they can slow down or even halt a software business's development process.

Any change to an application's user interface (UI) may cause the automated tests to fail randomly, and this unreliability is unacceptable for testers. It causes fluctuations in test results. Flaky tests have to be maintained regularly, or they need feedback loops to confirm that every step or change in a test is done correctly. This maintenance and these feedback loops usually end up slowing development down drastically. For development to succeed, tests have to be considered together with their fluctuation rates.

Although test results can be corrected to some extent by taking the fluctuation rates into consideration, the results may still not be reliable. The pass rate, that is, the number of test instances that passed divided by the number of test instances run, is only a probability, and it does not protect the business against failing tests. A single test with a high pass rate will almost certainly pass, but what about a large number of instances of the same test?

Say that 500 instances of the same test need to be run in a test suite, and each has the same pass rate of 99.7%. Since the tests are independent of each other, the pass rate of the suite is the test pass rate raised to the power of the number of tests, 0.997^500, which turns out to be about 22.26%. Even at 250 tests, the suite pass rate is 47.18%. That is unacceptable as a testing result, and the tests must be developed further to decrease flakiness. Increasing the test pass rate by just 0.1 percentage points, to 99.8%, raises the pass rate of a 250-test suite to 60.62%.
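The arithmetic above can be checked with a few lines of Python. This is only a minimal sketch: the function name `suite_pass_rate` is made up for illustration, and it assumes, as the text does, that every test passes independently and with the same probability.

```python
def suite_pass_rate(test_pass_rate: float, num_tests: int) -> float:
    """Probability that every test in the suite passes.

    Assumes all tests are independent and share the same pass rate.
    """
    return test_pass_rate ** num_tests


if __name__ == "__main__":
    # The three scenarios discussed in the text.
    for rate, count in [(0.997, 500), (0.997, 250), (0.998, 250)]:
        suite_rate = suite_pass_rate(rate, count)
        print(f"{rate:.1%} per test, {count} tests: {suite_rate:.2%} for the suite")
```

Real suites rarely have identical, fully independent tests, so treat the numbers as a rough guide rather than a prediction.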

Dealing with these flaky tests is one of the most crucial points of Test Driven Development, and allocating a certain amount of time for it in the development plan will go a long way. There is no solution that gets rid of flaky tests entirely, but there are several methodologies and practices that reduce their number. The key to reducing it is understanding why, specifically, the tests are flaky.

Reasons for Flakiness

Tests at the UI Layer

Looking at a project from a distance, and given that manual testing has been around for a long time, it is easy to conclude that a test which clicks and types in predetermined places on the UI should be enough to test the software. Up close, that is not how anyone should do things. The UI is the most error-prone part of a piece of software. It keeps changing with every update and every new idea and plan, and the back-end changes with it as well. Every small adjustment to the UI layer means going back to the existing tests and changing them to fit the new version. If some small part goes uncorrected, the whole project has a much higher failure rate. This makes all tests at the UI layer fragile.

Every test should be stable, scalable and reusable to produce a trustworthy result. UI layer tests do not meet these objectives and are prone to breaking. Tests should interact with an encapsulation layer rather than with the UI elements directly.

Poor Test Data

Sometimes the test data does not cover all the ground and cannot catch the scenarios where the software fails on corner cases. Usually the data covers the positive and negative scenarios, but the corner cases can be missing. Corner cases are crucially important for successful development.

Test data should not get in the way of the test design: when tests run independently of each other, their test data must be stored separately so that it is not corrupted.

When some tests depend on data from other tests, precautions must be taken so that the test data is not corrupted during test runs and the next test can work properly. Test data has to be correct at each point during the test run.

Narrow Scope of Test Environments

Since current technology offers a vast selection of computers and handheld devices, there are numerous parameters to consider for testing procedures that are done at the UI layer. Different operating systems, screen sizes, resolutions and browsers are all parameters that must be handled.
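To get a feel for how quickly these parameters multiply, the combinations can be enumerated. The sketch below uses made-up values for the operating systems, browsers and screen sizes; the lists and the `environment_matrix` function are illustrative assumptions, not a recommended device set.

```python
from itertools import product

# Hypothetical values, chosen only to show how quickly the matrix grows.
OPERATING_SYSTEMS = ["Android", "iOS", "Windows", "macOS"]
BROWSERS = ["Chrome", "Firefox", "Safari"]
SCREEN_SIZES = [(360, 640), (768, 1024), (1920, 1080)]


def environment_matrix():
    """Return every (operating system, browser, screen size) combination."""
    return list(product(OPERATING_SYSTEMS, BROWSERS, SCREEN_SIZES))


if __name__ == "__main__":
    matrix = environment_matrix()
    print(f"{len(matrix)} environments to cover")
    for os_name, browser, (width, height) in matrix[:3]:
        print(os_name, browser, f"{width}x{height}")
```

In practice some combinations do not exist and would be filtered out, but even a short list of values per parameter produces dozens of environments.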

On the back-end side, multiple parts of the software interact with services provided by the device, such as sensors or a network connection. With software this complex, a broader scope is necessary to cover everything thoroughly.

Complex Technology and Software

Newer or updated software uses many of the services provided by the device, such as the “shake to refresh” feature, which refreshes the page when the mobile device is shaken. That might seem like too small a detail, since it does only one tiny job. However, consider 20 different services being used at the same time. They have to work with each other without errors, but each service may work differently from the others, which can put them out of sync. Making such complex software work with so many services that do not function in the same way is a difficult task, and testing it can be even more frustrating. Testers have to take all of these complexities into account for the tests to succeed, which means the tests they run will most likely be asynchronous.

That is why software is usually broken down into smaller parts that are tested individually. But tests that cover multiple parts have to be executed in a certain sequence. In that case, the testing process has to wait for the previous step to complete before moving on to the next one. A time-out period has to be determined: if it is too short, the test will fail, and if it is too long, time is wasted and your tests will be slow.
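One common way to soften this trade-off is to poll for the previous step's completion instead of sleeping for a fixed time, so the test moves on as soon as the step is done and only fails once a generous upper limit is reached. The helper below is a minimal sketch of that idea; `wait_until` and its parameters are hypothetical names, not part of any particular testing library.

```python
import time


def wait_until(condition, timeout=5.0, interval=0.1):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass.

    Returns the truthy value, or raises TimeoutError if the limit is reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


if __name__ == "__main__":
    # Stand-in for a previous step that finishes after about 0.3 seconds.
    finished_at = time.monotonic() + 0.3
    wait_until(lambda: time.monotonic() >= finished_at, timeout=2.0, interval=0.05)
    print("previous step completed, moving on")
```

With this approach the time-out only bounds the worst case, so it can be set generously without slowing down the runs in which the step completes quickly.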

Reducing Flakiness

Testing Pyramid

It seems straightforward for an automated script to just click some buttons, fill out some text boxes and check the behavior against the expected results. But each change in the UI layer may alter the actual result, and consequently the test may fail. These tests are overly dependent on other components.

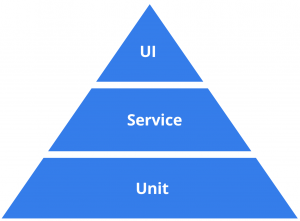

To reduce flakiness, the testing pyramid has to be adopted.

According to the testing pyramid, unit tests should make up the largest share of all tests, 80-90%. Unit tests check the core functionality of the different parts of the software. The back-end part is much more stable, which helps reduce the flakiness of these tests.

Next, tests for the service layer should make up 5-15%. These are the automated API or acceptance tests in which the business logic, such as inputs and outputs, is tested. They also do not interact with the UI layer and test the functions separately.

Finally, tests at the GUI layer should make up 1-5% of all tests. These tests must work with the GUI through UI encapsulation. To do this, testers must think about how to implement page objects: what is going to be done on this page, and how does an actual person do it? The steps usually involve clicking some buttons or filling out text boxes. There should be a method for each of these steps. With tests separated into these steps, when a test fails it is much easier to see which step caused the failure.
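A page object along these lines might look like the following sketch. Everything in it is an assumption made for illustration: `FakeDriver` stands in for a real browser driver so the example runs on its own, and `LoginPage`, its methods and the element ids are hypothetical.

```python
class FakeDriver:
    """Stand-in for a real browser driver so the sketch runs on its own."""

    def __init__(self):
        self.fields = {}
        self.clicked = []

    def type_into(self, element_id, text):
        self.fields[element_id] = text

    def click(self, element_id):
        self.clicked.append(element_id)


class LoginPage:
    """Page object: the only place that knows the page's element ids."""

    USERNAME_FIELD = "username"
    PASSWORD_FIELD = "password"
    SUBMIT_BUTTON = "login-button"

    def __init__(self, driver):
        self.driver = driver

    # One method per step a person would take on the page.
    def enter_username(self, username):
        self.driver.type_into(self.USERNAME_FIELD, username)

    def enter_password(self, password):
        self.driver.type_into(self.PASSWORD_FIELD, password)

    def submit(self):
        self.driver.click(self.SUBMIT_BUTTON)

    def log_in(self, username, password):
        """The whole user action, built from the individual steps."""
        self.enter_username(username)
        self.enter_password(password)
        self.submit()


def test_login_fills_form_and_submits():
    driver = FakeDriver()
    LoginPage(driver).log_in("alice", "s3cret")
    assert driver.fields == {"username": "alice", "password": "s3cret"}
    assert driver.clicked == ["login-button"]


if __name__ == "__main__":
    test_login_fills_form_and_submits()
    print("login page test passed")
```

If the login button's id changes, only the `SUBMIT_BUTTON` constant needs updating; the tests that call `log_in` stay the same.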

Separate Test Data from Design

Consider tests that depend on one another: if the first test fails, the second also fails, because the test data for the second test was supposed to be created by the first. This makes it crucial to have separate test data for each test. Otherwise, a lot of headaches lie ahead. With separate test data, tests can be run on their own without depending on one another, and if there are problems, it is easier to determine which test failed and whether the problem arose from the test design or the data.

For example, consider a social media website where a profile can add friends. In this case, there are two simple tests to run. The first checks the profile settings and information to see whether they are recorded correctly; the second tests the friending function and the messaging function that results from it. When a friend is added, the website should enable the user to send a message to them, right? The first test is independent of the second, and the second has to be separated from the first in that it does not care about the profile data, that is, about who is trying to send the message.
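The two tests from this example could be kept independent roughly as follows. This is a sketch under stated assumptions: `FakeSocialSite` is an in-memory stand-in invented for illustration, and its methods do not correspond to any real site's API. The point is only that each test builds the data it needs instead of relying on what another test left behind.

```python
class FakeSocialSite:
    """In-memory stand-in for the social media site (hypothetical API)."""

    def __init__(self):
        self.profiles = {}
        self.friends = set()

    def create_profile(self, name, email):
        self.profiles[name] = {"email": email}

    def add_friend(self, user, friend):
        self.friends.add(frozenset((user, friend)))

    def can_message(self, sender, recipient):
        return frozenset((sender, recipient)) in self.friends


def test_profile_information_is_stored():
    site = FakeSocialSite()  # fresh data for this test only
    site.create_profile("alice", "alice@example.com")
    assert site.profiles["alice"]["email"] == "alice@example.com"


def test_friends_can_message_each_other():
    site = FakeSocialSite()  # does not reuse the profile test's data
    site.create_profile("bob", "bob@example.com")
    site.create_profile("carol", "carol@example.com")
    assert not site.can_message("bob", "carol")
    site.add_friend("bob", "carol")
    assert site.can_message("bob", "carol")


if __name__ == "__main__":
    # The order does not matter because no data is shared.
    test_friends_can_message_each_other()
    test_profile_information_is_stored()
    print("both tests passed")
```

Because neither test reads data created by the other, a failure in the profile test says nothing about messaging, and the two can run in any order or in parallel.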

Decreasing Dependency on Different Environments

Most sophisticated software today depends on different environments, each working separately and correctly. The software sends various information to these environments and receives information from them. In the social media example, there can be one server for the front-end part and another for the core profile data. There can be another for, say, photos or other content the profiles have published. Additionally, there can be third-party servers that connect to other social media websites. In the end, everything depends on all of them working together, yet separately and correctly. If just one breaks the chain, the software falls apart. To test certain cases, the dependencies on some of these environments have to be limited.

There are 3 ways to do this:

- Limit calls to external systems

- Parameterize your connections

- Build a mocking server

These options do not simulate real-world conditions, so there must also be tests for real-world cases to make sure there are no other issues. The options essentially fool the software into thinking it is getting a response from another environment. The fake responses returned by a mocking server help developers see where an error stems from. Suppose the software has servers A, B and C: by mocking server A, the tests can check whether there is an issue with the other parts. By isolating the environments this way, the software can be divided into segments and developed more easily.
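As a rough illustration of the last two options, the sketch below starts a tiny mock of "server A" using Python's standard library and points the code under test at it through a parameter. The handler, the `/profiles/<name>` path, the canned response and the `PROFILE_SERVICE_URL` environment variable are all hypothetical names chosen for this example.

```python
import json
import os
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Skip any system proxy so the local mock is reached directly.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class MockProfileHandler(BaseHTTPRequestHandler):
    """Answers every GET with a canned profile, standing in for server A."""

    def do_GET(self):
        body = json.dumps({"name": "alice", "friends": 3}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def start_mock_server():
    """Start the mock on a free local port and return (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), MockProfileHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_port}"


def fetch_profile(name, base_url=None):
    """Code under test: the connection is a parameter, not a hard-coded host."""
    base_url = base_url or os.environ.get("PROFILE_SERVICE_URL", "http://localhost:8000")
    with _opener.open(f"{base_url}/profiles/{name}", timeout=5) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    server, base_url = start_mock_server()
    try:
        print(fetch_profile("alice", base_url=base_url))
    finally:
        server.shutdown()
        server.server_close()
```

Because the base URL is passed in or read from configuration, the same `fetch_profile` function can talk to the mock in most test runs and to the real server in the real-world tests mentioned above.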

Complex Technology

There are multiple ways to build applications, and new ones are still being created. Applications are simply not the same as they were a year ago, which means testing procedures also have to evolve with them. Some frameworks and techniques, such as AngularJS and AJAX, provide a response that the application can act on. This makes it much easier to implement behavior that depends on whether a response was received. The application can keep checking for a response for a specific amount of time and act on it. It can start an action when a response is received, or it may even stall until the response is received.

But not all frameworks or methodologies provide a response to make use of. Instead, these technologies can be imitated, and the application can be tricked into believing it has received a response. For example, an API can be replicated by mimicking its behavior and specifications, so that the app can be tested while the API is still under development and the logic of the application can be checked. The information being sent can also be imitated by creating fake objects.
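In Python, one way to create such fake objects is the standard library's `unittest.mock`. The sketch below assumes a hypothetical application function `greeting_for` and a hypothetical API client with a `get_user` method; only `Mock` itself comes from the standard library.

```python
from unittest.mock import Mock


def greeting_for(user_id, api_client):
    """Application logic under test. `api_client` is any object with get_user()."""
    user = api_client.get_user(user_id)
    if user is None:
        return "Hello, guest!"
    return f"Hello, {user['name']}!"


def test_greeting_uses_api_response():
    fake_api = Mock()
    # Canned response that the unfinished API is expected to return one day.
    fake_api.get_user.return_value = {"id": 7, "name": "Alice"}
    assert greeting_for(7, fake_api) == "Hello, Alice!"
    fake_api.get_user.assert_called_once_with(7)


def test_greeting_without_user():
    fake_api = Mock()
    fake_api.get_user.return_value = None  # the API knows no such user
    assert greeting_for(42, fake_api) == "Hello, guest!"


if __name__ == "__main__":
    test_greeting_uses_api_response()
    test_greeting_without_user()
    print("greeting tests passed")
```

The application logic is exercised without the real API existing yet. Once the API is available, the same logic still needs tests against it, since the fake only reflects what the API is assumed to return.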

In this article, the issues brought by flaky tests were considered, and methods to reduce them were addressed. Flaky tests are trouble for software development and a headache for testers. They have to be dealt with, but keep in mind that they can never disappear completely. Instead, they have to be reduced to a small fraction of all tests. For a testing session with very little headache, check out Testinium.